Client-side file uploads with Amazon S3

Captain's log, stardate d457.y37/AB

At MarsBased, we love simplicity. Simple solutions are faster to develop, easier to test and less expensive for our clients.

Do you want to know how we solved a high concurrency file upload problem using client-side Amazon S3 file uploads? Continue reading!

One of our most recent clients needed a site to promote their 25th anniversary event. At first, they planned to build it on top of their Ruby-powered CMS. However, after a careful analysis, we found that they didn't really need any of the features provided by the CMS. For a promotional site, a static website was enough to fulfil their needs.

To develop it, we used the same technology stack that we currently use for our own site: the Middleman static site generator, deployed with Netlify.

But they had one requirement that we couldn't meet with Netlify alone. They needed a contact form where users could upload a WAV file for a contest they were organising.

Netlify provides form processing out of the box, but it doesn't let you upload files to its servers. On top of that, our client was very concerned about security and wanted the uploaded files to be kept private, which ruled out 100% client-side solutions like Filestack.

They also told us that they were expecting more than 10k form submissions, 80% of them in the 48 hours after the website launch, coming from different regions (Western Europe, South America and Asia).

One of our greatest fears was the time it takes a user to upload a WAV file (they can be up to 100 MB). Long-running requests, with many of them running concurrently, were the perfect cocktail to bring our form processing service down.

Instead of routing the uploads through our own servers, we decided to let AWS take care of them directly from the user's browser.

Despite the many libraries you can find on the Internet to handle this use case, we decided to roll our own solution.

As we didn't need any file post-processing, this was by far the simplest way to achieve our goals. If you need to do some processing, we recommend checking out the Carrierwave-Direct gem or ActiveStorage, the most recent addition to Rails.

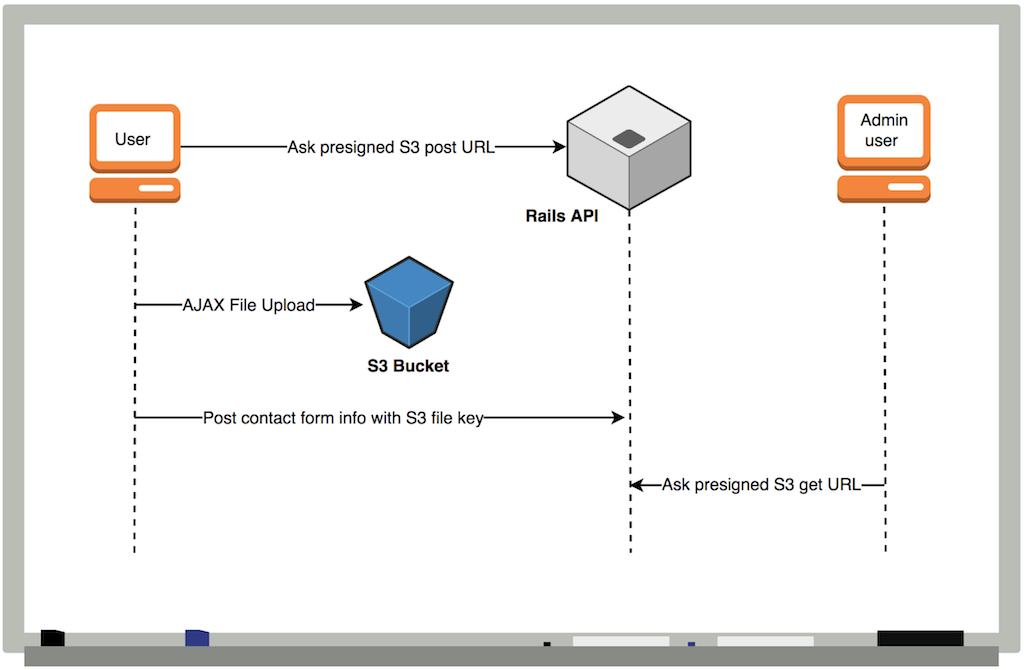

The following diagram summarises the whole workflow required to upload the file and send the contact form once the user clicks the submit button.

Now, let's stop talking and dive into some of the code that makes this work.

    module Api
      class UploadsController < ApiController
        # Returns the URL and form fields the browser needs to POST a file
        # straight to S3.
        def create
          s3_signed_post = s3_resource.bucket('s3-bucket-name')
                                      .presigned_post(key: filename,
                                                      success_action_status: '201',
                                                      acl: 'private')

          render json: { url: s3_signed_post.url,
                         form_data: s3_signed_post.fields }
        end

        private

        # Replace the placeholder strings with your own region and credentials.
        def s3_resource
          Aws::S3::Resource.new(
            region: 'AWS_S3_REGION',
            credentials: Aws::Credentials.new('AWS_ACCESS_KEY_ID',
                                              'AWS_SECRET_ACCESS_KEY')
          )
        end

        # S3 replaces ${filename} with the name of the uploaded file.
        def filename
          "uploads/#{SecureRandom.uuid}/${filename}"
        end
      end
    end

This code belongs to a Rails controller that uses the aws-sdk Ruby gem to generate the client-side request for the file upload. One of the good things about the aws-sdk gem is that you can install only the service-specific gem you actually need. In our case, it was the aws-sdk-s3 gem.

The filename generator is worth a closer look. We use a random value to ensure there won't be name collisions, and then the ${filename} placeholder provided by S3 to keep the original filename.
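To make the shape of the key concrete, here is a minimal Ruby sketch that mimics the substitution. Note that `resolve_key` is a hypothetical helper written for this illustration only: it is not part of the aws-sdk gem, and the real replacement happens on the S3 side when the file arrives.

```ruby
require 'securerandom'

# Placeholder that S3 replaces with the name of the uploaded file.
FILENAME_PLACEHOLDER = '${filename}'

# Builds the key template sent to S3. The random UUID segment keeps two
# users who upload files with the same name from colliding.
def key_template
  "uploads/#{SecureRandom.uuid}/#{FILENAME_PLACEHOLDER}"
end

# Hypothetical helper: imitates what S3 does with the placeholder.
# The block form avoids treating backslashes in the filename specially.
def resolve_key(template, original_filename)
  template.sub(FILENAME_PLACEHOLDER) { original_filename }
end

template = key_template
puts template
puts resolve_key(template, 'demo.wav')
```

Every call to `key_template` yields a different UUID, so the final key is unique even when the original filename is not.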

Notice the acl param. It tells S3 that the uploaded file is private, so any access to it requires authentication. In our case, that means generating a signed URL every time we want to download the file from outside AWS.

It's also worth mentioning that the SDK returns not only the URL to post to, but also a fields param containing metadata that you need to pass along when uploading the file. Among other information, it contains the key (filename) and the policy that authorises the upload.
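If you are curious about what that policy holds, it is a Base64-encoded JSON document with an expiration and a list of conditions the upload must satisfy. The sketch below builds an illustrative policy and decodes it again. Every value in `sample_policy` is made up for the example, `decode_policy` is a hypothetical helper, and the exact conditions the SDK emits may differ.

```ruby
require 'json'

# Illustrative policy document; all values here are invented.
sample_policy = {
  'expiration' => '2017-12-01T09:48:24Z',
  'conditions' => [
    { 'bucket' => 's3-bucket-name' },
    ['starts-with', '$key', 'uploads/8e445eba-cc5e-469a-8b57-72008f53a3d6/'],
    { 'acl' => 'private' },
    { 'success_action_status' => '201' }
  ]
}

# The `policy` form field carries the JSON document as Base64.
# pack('m0') produces Base64 without line breaks.
encoded_policy = [JSON.generate(sample_policy)].pack('m0')

# Hypothetical helper: turns a `policy` field back into a Ruby hash.
def decode_policy(encoded)
  JSON.parse(encoded.unpack1('m'))
end

decoded = decode_policy(encoded_policy)
puts decoded['expiration']
decoded['conditions'].each { |condition| puts condition.inspect }
```

Decoding the policy from a real response in a console is a handy way to check that the acl and key restrictions are what you expect.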

Once we have the information from the Rails API, we use the jQuery File Upload plugin to upload the file directly from the browser and then send the contact form back to the Rails application, this time with the Amazon S3 upload key.

    var formInputFile = $('form input[type="file"]');

    formInputFile.fileupload({
      url: url,            // obtained from presigned_post server call
      type: 'POST',
      formData: form_data, // obtained from presigned_post server call
      paramName: 'file',   // S3 expects the file in a field named "file"
      dataType: 'xml',     // S3 answers with an XML document
      done: fileUploadDone
    });

    // Reads the final object key from the S3 XML response and submits the form.
    function fileUploadDone(e, data) {
      var s3ObjectKey = $(data.jqXHR.responseXML).find('Key').text();
      submitContactFormWith(s3ObjectKey);
    }

You can use the asynchronous file upload plugin of your choice, as long as it sends a multipart POST request to the URL provided by AWS and includes every field from the formData param alongside the file. Keep in mind that S3 requires the file to be the last field in the form.

Notice that the S3 response is formatted as XML, so you'll have to parse it to extract the key that you will later use to retrieve the uploaded file. The key has the same value that we sent to AWS, with the ${filename} placeholder replaced by the actual filename.

The submitContactFormWith(s3ObjectKey) function adds the s3ObjectKey to a hidden input field and submits the form along with the rest of the contact information.
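Since the key travels back in a hidden field, a user could tamper with it, so it may be worth checking its shape on the Rails side before storing it. This is a minimal sketch of such a check, assuming the `uploads/<uuid>/<filename>` layout produced by the controller; `valid_upload_key?` and `UPLOAD_KEY_PATTERN` are hypothetical names, not something our original code or the aws-sdk gem provides.

```ruby
# Matches keys shaped like "uploads/<uuid>/<filename>": a UUID segment
# followed by a single filename with no further nesting.
UPLOAD_KEY_PATTERN = /\Auploads\/\h{8}-\h{4}-\h{4}-\h{4}-\h{12}\/[^\/]+\z/

# Hypothetical helper: true only for strings matching the expected layout.
def valid_upload_key?(key)
  key.is_a?(String) && UPLOAD_KEY_PATTERN.match?(key)
end

puts valid_upload_key?('uploads/8e445eba-cc5e-469a-8b57-72008f53a3d6/demo.wav')
puts valid_upload_key?('private/someone-elses-file.wav')
```

A check like this only confirms the format. It doesn't prove that the object exists in the bucket, which would take an extra request to S3.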

Once the file is uploaded to your S3 bucket, it can't be accessed publicly: every request to download it has to be authenticated.

To do so, use the aws-sdk gem again from your Rails backend to obtain a pre-signed URL.

    credentials = Aws::Credentials.new('AWS_ACCESS_KEY_ID',
                                       'AWS_SECRET_ACCESS_KEY')

    s3_object = Aws::S3::Object.new('bucket',
                                    'object-s3-key',
                                    region: 's3-bucket-region',
                                    credentials: credentials)

    s3_object.presigned_url(:get)

The presigned_url(:get) call returns an authorised URL that we can use to download the file. It will have a format similar to:

https://my-bucket.s3.eu-west-1.amazonaws.com/uploads/8e445eba-cc5e-469a-8b57-72008f53a3d6/filename?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=xxx&X-Amz-Date=20171201T084824Z&X-Amz-Expires=900&X-Amz-SignedHeaders=host&X-Amz-Signature=xxx

Notice that the URL has an expiration time (X-Amz-Expires, in seconds), after which it is no longer authorised, and that it doesn't contain any sensitive security information (besides the signature granting access to the requested file).
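As a rough illustration of how that expiry works, the moment a URL stops being valid can be derived from its own query string: the signing time in X-Amz-Date plus the lifetime in X-Amz-Expires. The sketch below assumes those two parameters are present, as in the sample URL; `presigned_url_expiry` is a hypothetical helper, not an aws-sdk method.

```ruby
require 'uri'
require 'time'

# Hypothetical helper: returns the UTC time at which a pre-signed URL
# expires, based on its X-Amz-Date and X-Amz-Expires query parameters.
def presigned_url_expiry(url)
  params = URI.decode_www_form(URI.parse(url).query).to_h
  stamp = params.fetch('X-Amz-Date')
  parts = /\A(\d{4})(\d{2})(\d{2})T(\d{2})(\d{2})(\d{2})Z\z/.match(stamp)
  raise ArgumentError, "unexpected X-Amz-Date: #{stamp}" unless parts

  signed_at = Time.utc(*parts.captures.map(&:to_i))
  signed_at + Integer(params.fetch('X-Amz-Expires'))
end

url = 'https://my-bucket.s3.eu-west-1.amazonaws.com/uploads/' \
      '8e445eba-cc5e-469a-8b57-72008f53a3d6/filename' \
      '?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=xxx' \
      '&X-Amz-Date=20171201T084824Z&X-Amz-Expires=900' \
      '&X-Amz-SignedHeaders=host&X-Amz-Signature=xxx'

puts presigned_url_expiry(url).iso8601 # prints 2017-12-01T09:03:24Z
```

With the sample values, the URL is valid for 900 seconds (15 minutes) after it was signed, so links should be generated on demand rather than stored.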

For most of your day-to-day file upload needs, gems like Carrierwave or Paperclip will work just fine.

But sometimes special requirements will push you to roll your own custom solution. We hope that this post will serve as inspiration when that happens.
